Yu-Shiang Wong

I obtained my Ph.D. at University College London, advised by Prof. Niloy J. Mitra, as a member of the SmartGeometry group. During my Ph.D., I focused on dynamic scene reconstruction and collaborated with Prof. Matthias Nießner. Before that, I worked as a Research Assistant at Academia Sinica in Taiwan, supervised by Prof. Keh-Yih Su. I completed my Master’s in Computer Science at National Tsing Hua University in the CGV Lab, supervised by Prof. Hung-Kuo Chu. My research interests include neural representations, 3D/4D reconstruction, synthetic environments, and generative modeling.


Deep Learning RnD Engineer

2023.10

I joined NVIDIA in Taipei as a Deep Learning RnD engineer, where I work on 3D scene understanding technology.

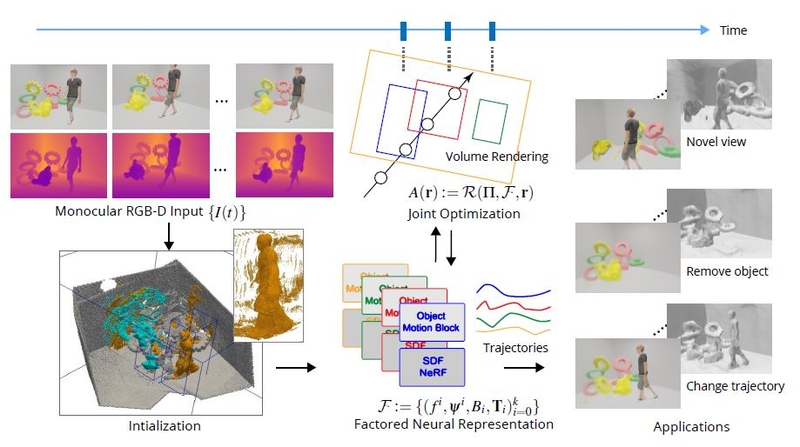

Factored Neural Representation for Scene Understanding

Symposium on Geometry Processing 2023

[Project Website]

[Arxiv]

We introduce a novel representation for reconstructing dynamic environments using monocular RGB-D input.

PhD

2023

I successfully defended my PhD thesis, titled "Dynamic Scene Reconstruction and Understanding"!

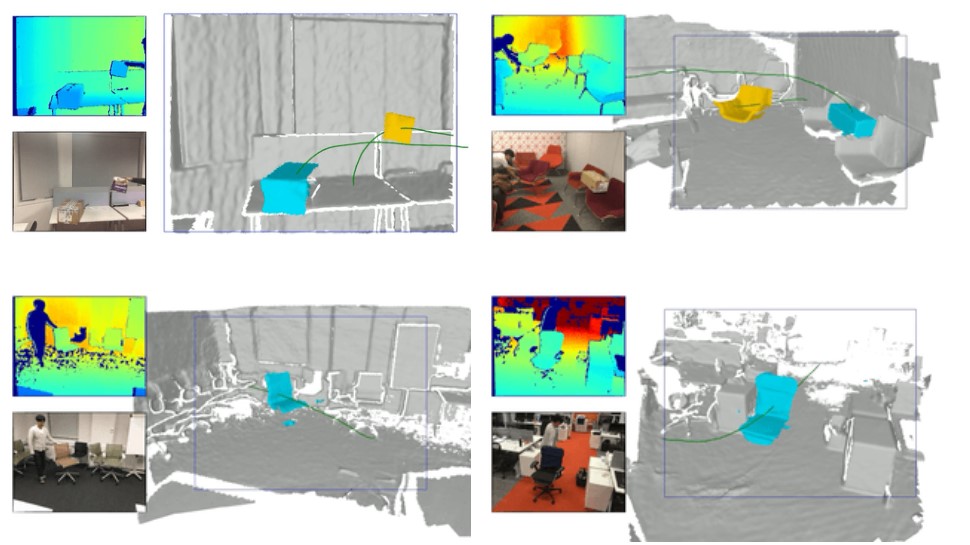

RigidFusion: RGB-D Scene Reconstruction with Rigidly-moving Objects

Eurographics 2021

[Project Website]

[Youtube]

We introduce RigidFusion, an RGB-D reconstruction system that targets dynamic environments without using object priors.

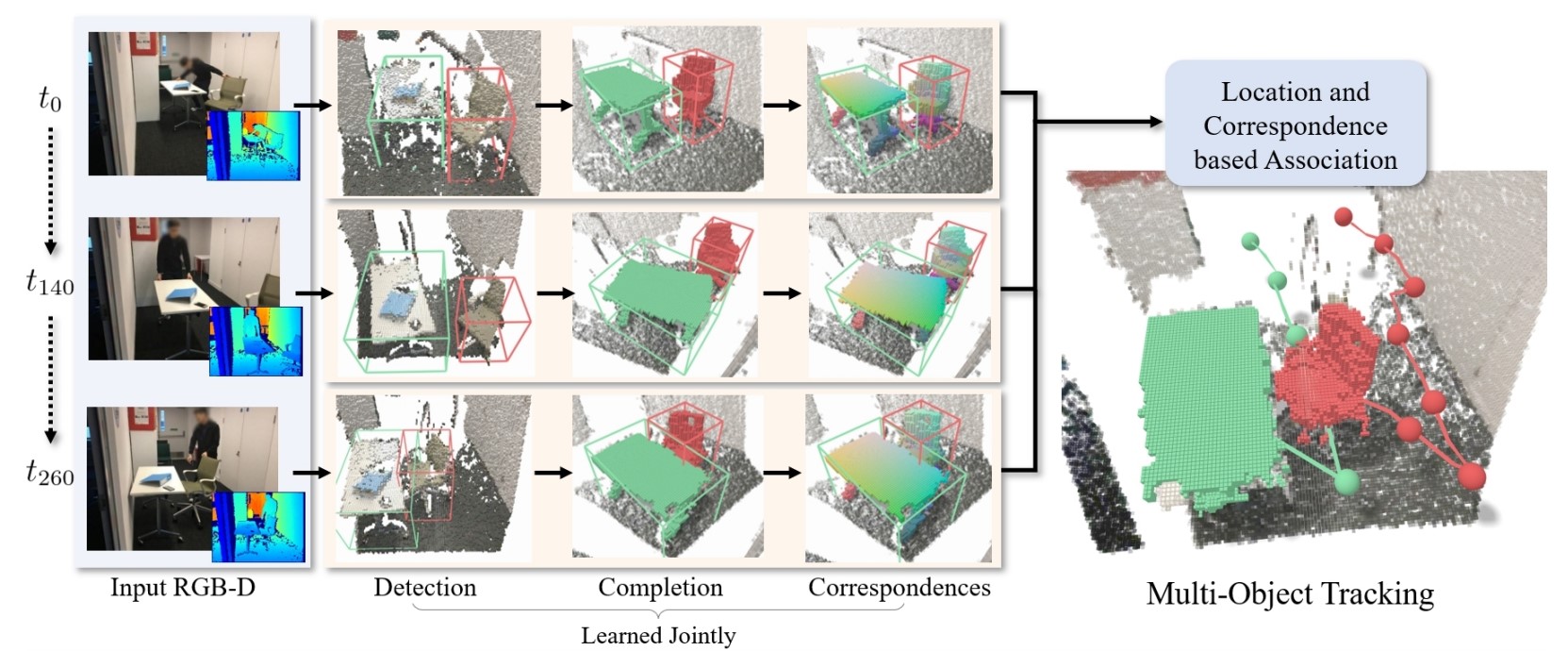

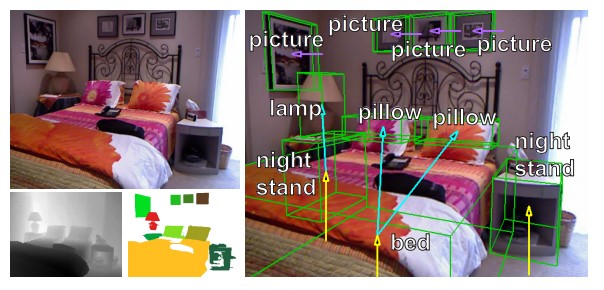

Seeing Behind Objects for 3D Multi-Object Tracking in RGB-D Sequences

CVPR 2021

[Project Website]

[Youtube]

Multi-object tracking with deep priors using RGB-D input. Our key insight is that geometry completion helps tracking.

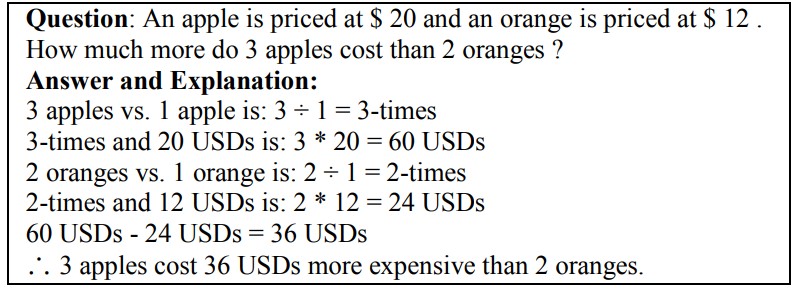

Meaning-based Statistical Math Word Problem Solver

IJCAI 2017

[Paper]

We present a goal-oriented, meaning-based statistical framework for solving math word problems. The system can also generate a reasonable explanation.

SmartAnnotator: Annotating Indoor RGBD Images

Eurographics 2015

[Project Website]

[Youtube]

We present SMARTANNOTATOR, an interactive system to facilitate annotating raw RGBD images. The system performs tedious tasks and iteratively refines user annotations.

Machine Learning Toolbox

This toolbox implements common machine learning methods in MATLAB, including support vector machine (SVM) with sequential minimal optimization, Laplacian SVM, and spectral clustering.

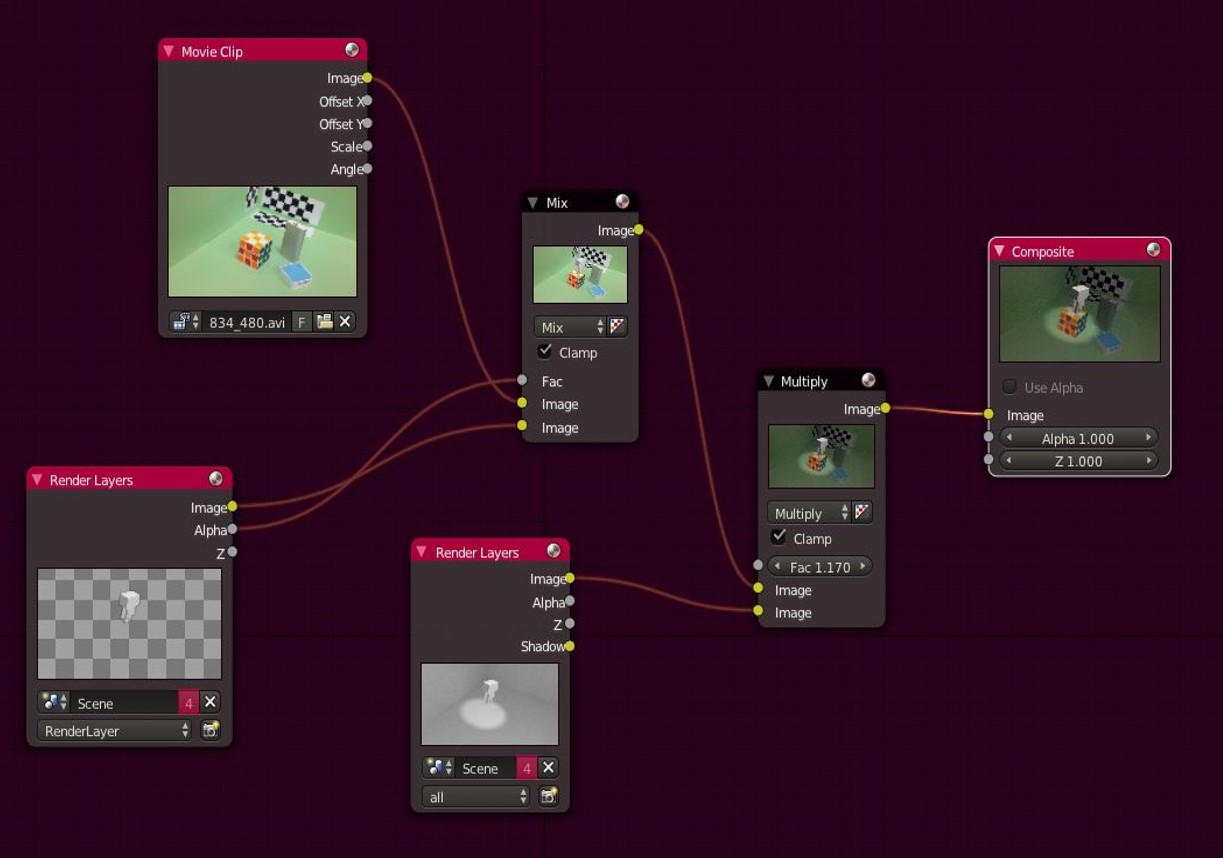

3D Object Composition

In this project, we developed a system that supports 3D object composition. It was integrated into Blender via Blender’s Python API to add special visual effects.

Youtube Video I - Object Composition

Youtube Video II - VFX

Web-based Image Annotator

This is a web-based tool that allows users to annotate 2D images. We used it to collect over ninety annotations, and it was later integrated into our single-view reconstruction system.

Software Renderer

This renderer employs a fixed-function rendering pipeline and supports triangle rasterization, texture sampling, mipmapping, and bump mapping.